If you’re a screenwriter—or a car salesman—you’re already thinking of ways to write non-sci-fi self-driving cars into a movie script. Automobiles have been integral to the plots of gritty noir crime movies, heist flicks, romantic comedies, and obviously, road movies.

What’s clear is the self-driving car won’t be the ideal getaway vehicle anymore, particularly if there is no steering wheel or gas pedal. And while it’s not hard to imagine a world in which technology controls people, the alternative script involves self-driving cars that are pushed around by human drivers, pedestrians, scooters, or even bicyclists.

“You can bully self-driving cars,” said Peter Hancock, Provost’s Distinguished Research Professor and head of the MIT² Laboratory at the University of Central Florida (UCF), where he studies autonomous vehicles and psychology. “Once you know the way it is going to react, you can move it over in its lane or make it decelerate, because you can be the bigger bully and you know that it is going to avoid hitting you. If you start edging into the lane it is going to start edging away from you.”

Who should fear whom? For now, people seem to be afraid of the self-driving car, and the numbers don’t seem to be improving. AAA reported in May 2018 that Americans are more afraid to ride in a fully autonomous vehicle than they were the year before: 73% of American drivers said they would be afraid to ride in a fully self-driving vehicle.

“There may be some people who never trust an automated system,” said Hancock, whose lab studies the dimensions of trust between humans and systems. “When Tesla and Waymo are marketing, they have to market to people who are going to trust those systems.”

But if anyone should be afraid, it may be the self-driving car OEMs and their programmers.

“This will be a big problem, and so far we have not seen plans for a solution,” said Ty Garibay, chief technology advisor at ArterisIP. “The big problem is robots or autonomous things interacting with people. And while this may not be a problem in a factory, because people are paid to work with robots, it’s definitely an issue with autonomous vehicles in cities. I don’t see how autonomous vehicles will work in an urban area. You see the same thing happening in the home market with robots if a child knows it won’t do anything.”

The reason is simple—human beings are unpredictable, aggressive, and sometimes malicious creatures.

Mixed driving styles

This presents a problem because self-driving cars and human drivers will share the road for many years. “It’s going to be very interesting as we look to the future with that mixed fleet because the autonomous cars are driven and behave differently than their human counterparts, and that’s often a good thing,” Greg Brannon, director of automotive engineering at the American Automobile Association, told Semiconductor Engineering. “Self-driving cars are more cautious. They are more likely to obey speed limits. They are less likely to be distracted. All those things are good. But they will stand out among human-driven counterparts for some time, until the programming develops in the sensor fusion and all of the artificial intelligence to the point that they mimic a safe human driver.”

Different driving styles add to the complexity that self-driving car programmers must contend with. Cities and states vary in what is expected and how drivers interact. Human drivers and pedestrians have to learn these styles when visiting from somewhere else, and the same is true for a self-driving car.

“I just returned from Italy,” said Hancock. “I did a bit of driving myself. How does a self-driving car ever work in Italy, when you are constantly being cut off by Vespas?” He concluded it’s faster to walk.

There’s an unwritten code among the people who use the transportation system, he said, using Italy as an example. At first, such local customs don’t make sense to a stranger. Over time, though, a visiting driver learns how local drivers communicate with one another. Drivers in Italy, for instance, don’t allow slow drivers to sit in the fast lane. A human gradually learns to appreciate why local customs exist, but a self-driving car needs them programmed into its logic.

“If you traveled overseas or traveled enough around the U.S., you can begin to realize that different geographies have different driving styles and likely for the automation to function well, the automation might have to be geographically oriented,” Brannon agreed. “In fact, it’s a very complex task, but as we look toward the future, that’s something to be considered.”

“Imagine a car, an autonomous vehicle, that waits for the proper break in traffic before it enters the Holland Tunnel. That autonomous car likely will be there for the next decade before it gets into the tunnel,” Brannon said with a laugh. “There’s all those things that will have to be worked out. So it will be a mix, and there’s a lot of very, very smart people that are working in this space, but it is a big challenge.”

Cars will have to adapt, or they will have to be regulated.

“There are two scenarios unfolding here,” said Burkhard Huhnke, vice president of automotive strategy at Synopsys. “One is happening in China, where you can have a fully autonomous city and avoid hybrid mixes of human drivers and autonomous vehicles. The other is when you have a hybrid world of human-driven and autonomous vehicles, which is where we are today. The average time a car stays in the market is eight years, and within the next eight years you’re going to see more and more autonomous technology coming into the market. The big question is how that will affect your daily commute.”

It’s not just people who will cause problems, though. How autonomous vehicles interact with other autonomous vehicles has yet to be ironed out, too.

“If your maximum speed is 10% lower than your competitor’s car, that can cause a problem,” Huhnke said. “Even with acceleration, does it go full-throttle or smoothly? Programming everything to work together is quite a challenge. Humans see a speed limit sign and sometimes we think we can go faster. So it’s a question of interpretation of rules. With self-driving cars, you have to build in flexibility to be able to deal with that.”

Bullying the robocar

Autonomous vehicles are expected to be predictable, polite and patient drivers. And humans will quickly learn how to push these types of suckers around. Will pedestrians start jaywalking more and jumping in front of self-driving cars that stop no matter what?

“Gosh, I sure hope not,” said AAA’s Brannon. “There’s something called physics that is involved, as well. Regardless of the technology, that’s very difficult to overcome.”

That may boil down to how human-like the technology can become. “The key right now is that people can tell it’s an autonomous car,” said Jack Weast, chief systems architect of autonomous driving solutions at Intel. “They drive conservatively, which makes it a target. There’s a technical reason for that, which is decision-making algorithms are implemented using reinforcement learning. It’s basically reward behavior, which could be time to destination or fuel economy. The problem with AI systems is that they’re probabilistic, so you’re dealing with a best guess. There are multiple sensors to recognize cars and pedestrians, so if one sensor misses another picks it up. But there is no redundancy in the decision-making. And because a wrong decision may cause an accident, the weight for safety has a higher reward. That’s why autonomous vehicles are overly conservative. You see them jerking back into their lane. You also see human drivers getting annoyed by them.”
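Weast’s point about reward weighting can be illustrated with a toy, one-step comparison of expected reward. This is not a reinforcement learning algorithm, and the action names, time savings, collision probabilities, and weights below are all hypothetical. The sketch only shows that once the penalty weight on collision risk is large enough, the slower, lower-risk action wins even when its risk advantage is tiny.

```python
from dataclasses import dataclass


@dataclass
class Action:
    name: str
    seconds_saved: float          # hypothetical time-to-destination benefit
    collision_probability: float  # hypothetical estimated risk of this maneuver


def expected_reward(action: Action, safety_weight: float) -> float:
    """Reward the time saved, minus the estimated collision risk scaled by safety_weight."""
    return action.seconds_saved - safety_weight * action.collision_probability


def choose(actions: list[Action], safety_weight: float) -> Action:
    """Pick the action with the highest expected reward."""
    return max(actions, key=lambda a: expected_reward(a, safety_weight))


# Hypothetical choice: merge assertively now, or wait for a clear gap.
actions = [
    Action("merge_now", seconds_saved=8.0, collision_probability=0.001),
    Action("wait_for_gap", seconds_saved=0.0, collision_probability=0.0001),
]

print(choose(actions, safety_weight=1_000).name)    # merge_now
print(choose(actions, safety_weight=100_000).name)  # wait_for_gap
```

Raising `safety_weight` from 1,000 to 100,000 flips the choice from merging to waiting, which mirrors the conservative behavior Weast describes.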

Intel’s approach to solving this problem is to create an AI stack, rather than an end-to-end system, establishing a hierarchy of AI functions. At the top is what it calls Responsibility-Sensitive Safety (RSS), which the company has published as open source.

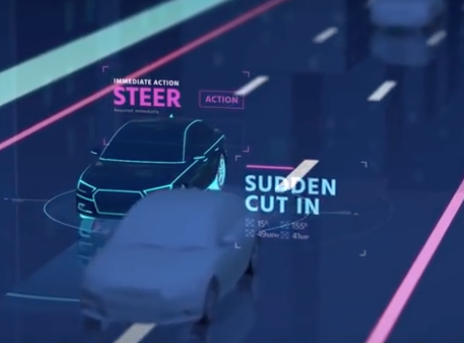

Fig. 1: Dealing with unpredictability using RSS. Source: Intel/Mobileye

“This allows a car to be more assertive and human-like,” said Weast. “If I’m sitting in the back seat of an autonomous car I can’t tell if it’s being driven by a person or whether it’s autonomous. This is where we’re heading. It’s a mixed environment future. Even in New York City, a human driver will not hit the brake pedal until the last possible second. Autonomous vehicles can do this, too. They can wedge into traffic to create space, look for a vehicle to give way, and back up into their lane if they don’t. The origins of this approach go back to safety, and the safety value of RSS is that it can be formally verified. This is a lot different than the current industry approach of, ‘Here’s a black box, trust me.’ It doesn’t matter if you’ve driven 1 million miles. End-to-end AI approaches are dangerous. You need layering or stacking or stages, so if one algorithm changes, the independent safety seal remains static.”
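One part of RSS that can be written down concretely is its rule for a safe following distance. The sketch below implements the longitudinal safe-distance formula as described in Mobileye’s published RSS model. It assumes the worst case: the rear car keeps accelerating for its full response time and then brakes only gently, while the front car brakes as hard as it can. The function name and the example parameter values are illustrative assumptions, not Intel or Mobileye code or calibrated values.

```python
def rss_safe_longitudinal_distance(
    v_rear: float,       # speed of the rear (following) car, m/s
    v_front: float,      # speed of the front car, m/s
    rho: float,          # response time of the rear car, s
    a_accel_max: float,  # max acceleration of the rear car during rho, m/s^2
    a_brake_min: float,  # min braking the rear car is guaranteed to apply after rho, m/s^2
    a_brake_max: float,  # max braking the front car might apply, m/s^2
) -> float:
    """Minimum gap (m) that lets the rear car always stop without hitting the front car."""
    # Rear car: accelerates for rho seconds, then brakes at a_brake_min until it stops.
    v_after_response = v_rear + rho * a_accel_max
    rear_travel = (
        v_rear * rho
        + 0.5 * a_accel_max * rho**2
        + v_after_response**2 / (2 * a_brake_min)
    )
    # Front car: brakes at a_brake_max from the start until it stops.
    front_travel = v_front**2 / (2 * a_brake_max)
    # A negative result means any gap is safe, so clamp at zero.
    return max(0.0, rear_travel - front_travel)


# Both cars at 20 m/s (72 km/h), with illustrative parameters.
print(rss_safe_longitudinal_distance(20.0, 20.0, 0.5, 2.0, 4.0, 8.0))  # 40.375
```

Shortening the response time `rho`, which an automated system can plausibly do better than a human, shrinks the required gap. This is one way a formally specified rule could let a car drive more assertively without giving up its safety guarantee.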

Another issue is the safe following distance, said UCF’s Hancock. A safe following distance can be far behind the car ahead, or just one foot away. “The reason one foot proves to be safe is because the car in front can only change velocity a very little amount, so there is not enough space to cause a collision.” If an automated vehicle chooses one foot as its optimal following distance, it could cause panic unless the human driver ahead knows that the car behind is a safe self-driving car.

Siemens has developed a verification and simulation system that uses a virtualization engine to discover edge cases, which can then be used to train self-driving cars. When Google logs millions of miles of road tests, “what they’re looking for is the edge cases where some complex traffic scenario appears pedestrians and whatever else,” said Andrew Macleod, director of automotive marketing at Mentor, a Siemens Business. “That can all be done in the virtual world, so Siemens has got a virtualization capability where we can create vehicle scenarios against pedestrians coming in front of a vehicle at a specific speed, and so on. We can simulate all of the different data from sensors, whether it’s a camera, radar, LiDAR. And then we can add weather, for example. How does the camera sensor respond if it is snowing? We model all of that and create these edge cases, and then feed that data into a vehicle simulator to see how the vehicle responds. For example, you might have some scenario where the vehicle has to swerve to avoid the obstacle, pedestrian or whatever else. We can actually model how the vehicle behaves in an extreme example.”
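Macleod’s description of searching for edge cases in simulation can be caricatured as a parameter sweep. The sketch below is not Siemens’ tool. It enumerates combinations of vehicle speed, road condition, and distance to a pedestrian, and flags every scenario where the car’s reaction-plus-braking distance exceeds the distance available. It models weather only as a tire-road friction coefficient, using rough illustrative values that are assumptions. Sensor effects, such as a camera degrading in snow, are left out entirely.

```python
from itertools import product

G = 9.81  # gravitational acceleration, m/s^2

# Hypothetical tire-road friction coefficients standing in for weather.
FRICTION = {"dry": 0.8, "wet": 0.5, "snow": 0.2}


def stopping_distance(speed: float, mu: float, reaction_time: float) -> float:
    """Distance (m) covered during the reaction time plus braking to a stop."""
    return speed * reaction_time + speed**2 / (2 * mu * G)


def find_edge_cases(speeds, pedestrian_distances, reaction_time=0.5):
    """Return every (speed, weather, distance) combination where the car cannot stop in time."""
    failures = []
    for speed, weather, distance in product(speeds, FRICTION, pedestrian_distances):
        if stopping_distance(speed, FRICTION[weather], reaction_time) > distance:
            failures.append((speed, weather, distance))
    return failures


cases = find_edge_cases(speeds=[10.0, 15.0], pedestrian_distances=[20.0, 40.0])
for case in cases:
    print(case)
```

With these inputs the sweep flags five failing scenarios, including a 10 m/s approach on snow with a pedestrian 20 m ahead. A real simulator would then replay such cases through full vehicle and sensor models.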

What isn’t obvious is how people will react. “Automotive OEMs are dealing with this today from the driver and pedestrian side,” said Tim Lau, senior director of automotive product marketing at Marvell. Current collision-avoidance systems may stop a human driver from attempting that kind of bullying. “They really want to have systems that predict what the driver intends to do. To be able to predict that, there’s a lot of information that the car needs to understand, including the driver’s state. There are so many technologies out there, like ADAS for collision avoidance.”

But the pedestrian who bullies? “Once I asked the car OEMs how they protect for that,” said Lau. “What is the plan? The systems are designed to protect and gather data and make best decisions, but if someone wants to be malicious and step in front of the car, I don’t think there is a system in place today that can deal with that.”

Creating systems that communicate with pedestrians can help. These so-called V2X systems have many uses, and a variety of technologies are under development around the world. “This will be different technologies, like the smart garage has sensors that tell you what spot is taken or not,” said Lau. “Having that ability to have car-to-car, car-to-person, car-to-infrastructure communication is vital. It can tie into your cell phone or watch. Even the visibility around a corner will be possible.”

The real turning point, though, will be the smart city. “What the smart city offers you that sensors like LiDAR and camera and radar don’t is vehicle-to-vehicle communication and vehicle-to-infrastructure,” said Mentor’s Macleod. “The question of autonomous vehicles bullying each other and nudging each other on the road wouldn’t happen once we can communicate with each other because the most efficient path to get from A to B will be defined, with a driving scenario mapped out in terms of when to accelerate and when to brake. Eventually, traffic lanes probably wouldn’t be needed. The cars will just communicate with each other.”

Reaching for Level 5

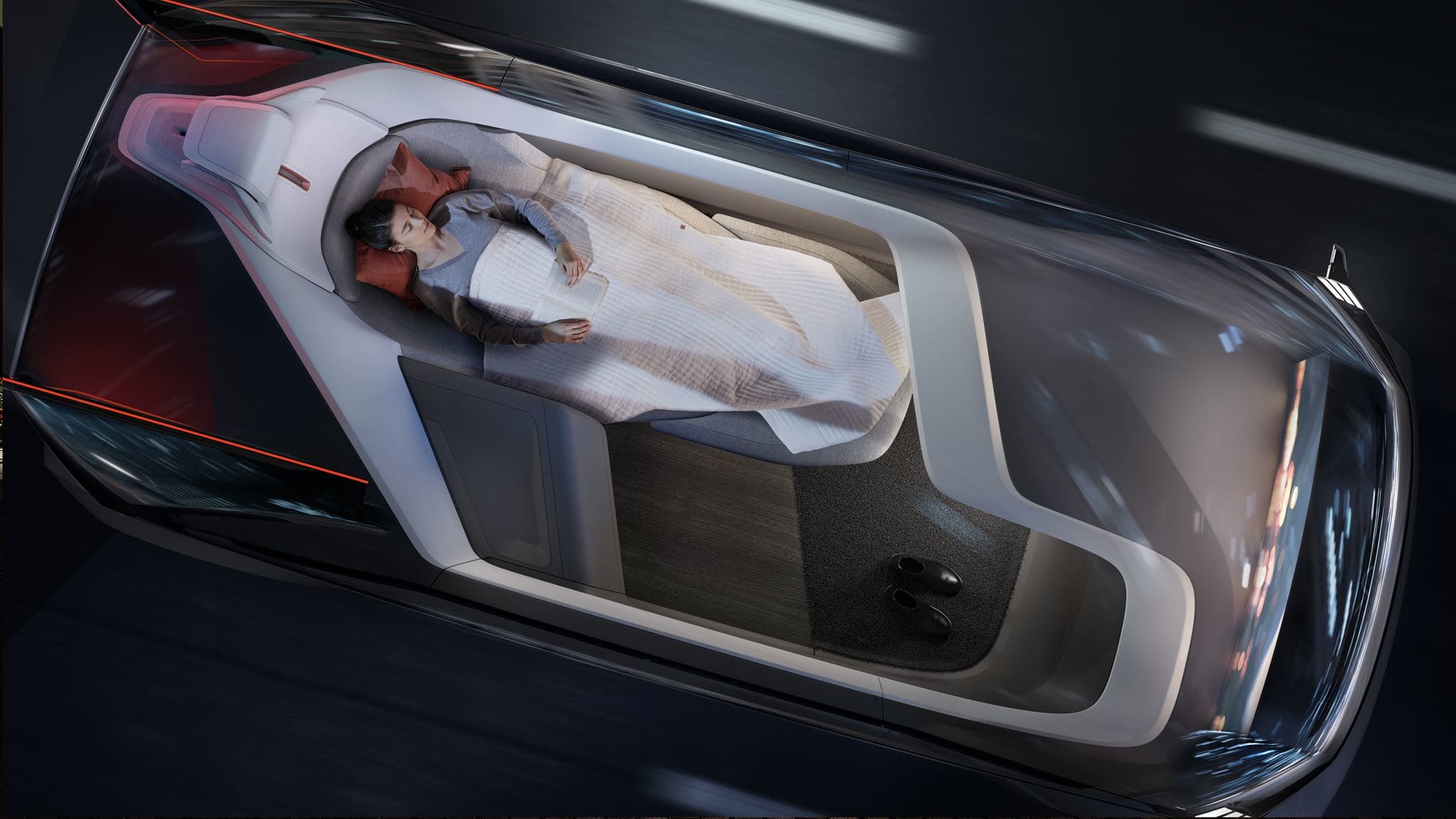

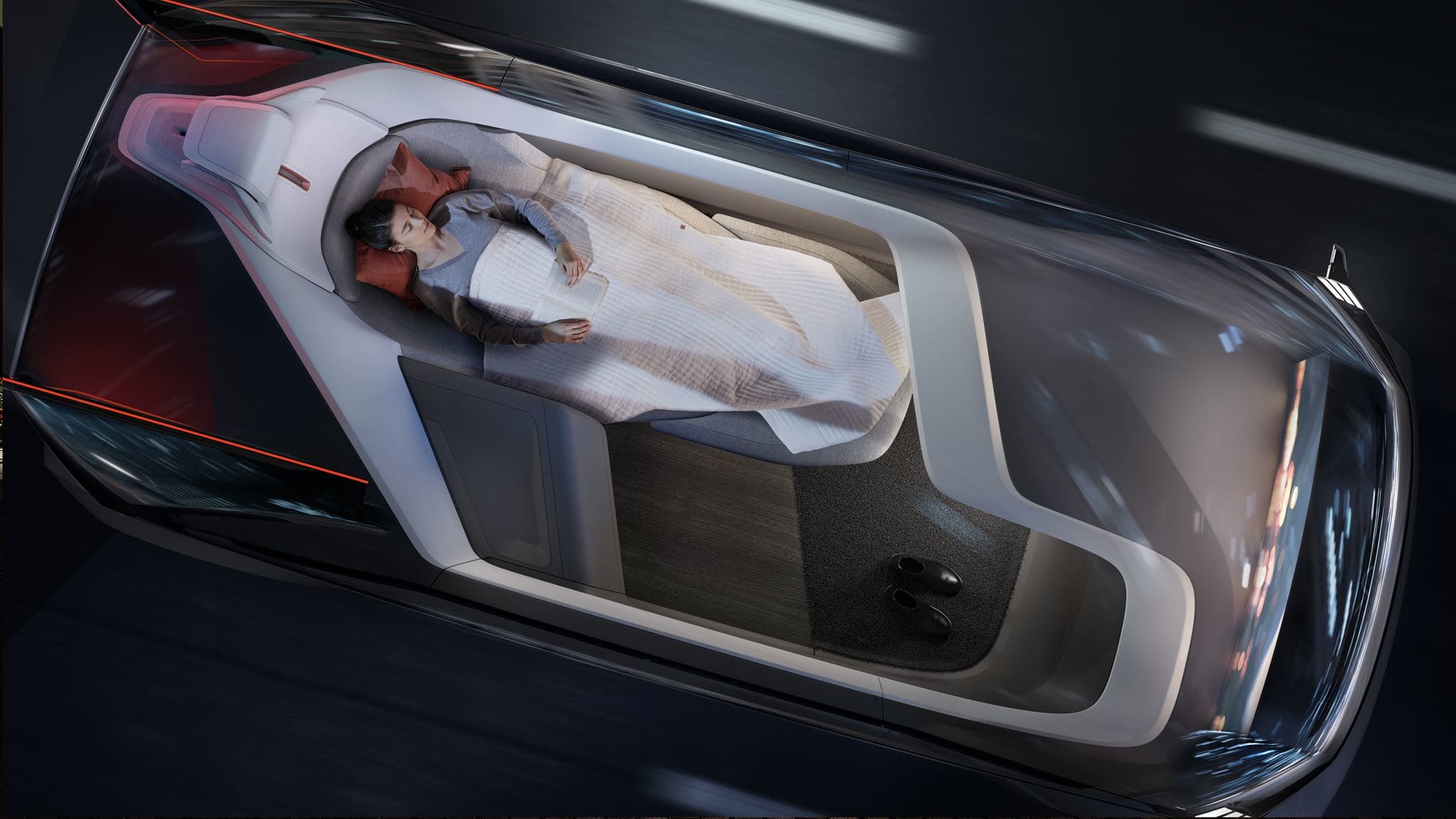

Despite Volvo’s recently announced concept for autonomous commuter pods, where passengers can sit at a table or sleep in a bed as a vehicle traverses hundreds of miles of roadway, the short-term future appears to be much more modest. Most likely the first self-driving cars will be used for ride sharing in limited areas of a city. Along with that, fleets of trucks that cruise the freeways at night are likely to deliver goods to a simple end point.

Fig. 2: Falling asleep at the wheel has new meaning in Volvo’s concept robocar 360c. Source: Volvo

“We don’t think it’s going to be anything like there’ll be more self-driving cars and all of a sudden the streets will be flooded with them everywhere,” said Macleod. “But you can put a bunch of self-driving trucks on the road at night going from point A to point B very predictably. After that, campuses and maybe airports, followed by something like a downtown area maybe three to five square miles, which would be fully autonomous with autonomous robotaxis.”

This matches Ford’s current thinking, which identifies urban mobility and delivery services as the likely first uses for the technology. The company is working on this plan with startup Argo AI. Ford has set a goal of having self-driving cars on the road by 2021, but Bryan Salesky, Argo’s CEO, said the technology won’t be released until it is ready.

“Every day that we test we learn of and uncover new scenarios that we want to make sure the system can handle,” said Salesky. Ford is testing the cars in Miami-Dade County in Florida. Even then, the cars will operate only in closed, geofenced areas of certain cities and will function as a ride-sharing service. The other option is automated trucking at night.

Ford puts two people inside each test car to monitor the road tests and take over the driving if needed. They report back to Argo if unusual interactions with pedestrians or other vehicles occur around the car. “In the cities we operate in, we haven’t seen any reactions that are different than what you would normally see,” said Salesky. “They very much treat us like any other vehicle. We have actually gotten a positive response from the community in that our vehicles are cautious. They don’t get distracted. They are able to stop with plenty of margin before a crosswalk to let people use the crosswalk. Our car won’t pull into a crosswalk and block it. Our vehicle will nudge over and give pedestrians who are jogging along the side of the road a little more room.”

Ford is also experimenting with using lights to communicate with pedestrians, although that work still has a long way to go. “What we do over time is build up this database of regression tests that need to pass before a new line of code can be added to the system. That scenario set is a really critical piece of our development process,” said Salesky, who got his start working on DARPA vehicles as part of Carnegie Mellon University’s National Robotics Engineering Center and on Google’s self-driving car.

Argo and Ford don’t push code to the cars until it has been thoroughly tested and shown to be error-free, even if the change is a simple one that fixes a single problem. Argo owns its AI database and code.

Will self-driving cars save lives?

The semiconductor and automotive industries contend that self-driving vehicles will save lives. Some academics agree that the conservative, law-abiding self-driving car will do just that. But there’s a catch.

There are about 35,000 to 40,000 vehicle-related fatalities a year in the United States, depending on who’s counting. The National Safety Council (NSC) is spearheading an effort to get that number down to zero by 2050 through its Road to Zero coalition. Drill down into those numbers, though, and motorcycles were involved in 14% of the deaths in 2014, according to the NSC. Moreover, almost half of roadway deaths occur on rural roads, which are the most dangerous roads to drive on.

So will self-driving cars make a dent in those numbers any time soon? Where speed and impairment (drunk, drugged, drowsy, or texting) are factors in the deaths, the self-driving car may have a big role to play. While the coalition concluded that autonomous driving assistance systems will help, it noted they are only part of the solution. The coalition also wants to foster a culture of zero tolerance for unsafe behavior, along with better roadways and infrastructure, as laid out in its report.

“We continue to see deaths on the American roadway, some 35,000 annually, and somewhere between 80% and 90% of those deaths are the result of human error,” said AAA’s Brannon. “The idea is that if you can remove that human error, the number will decrease. That’s not to say that the autonomous vehicles won’t make mistakes along the way.”

But saving lives as a selling point doesn’t necessarily add up yet, according to Professor Hancock. “The problem is that these systems cannot be free of flaw. It is just not physically possible. The flaw might be in the design, it might be in the software, it might be in the software integration. This is not going to be a collision-free system for many decades to come. In fact, autonomous systems will create their own new sorts of accidents. We are going to see these types of collisions coming up not despite automation, but because of some of the assumptions that they make.”

The hack is coming from inside the car

Not all the troublesome human behavior comes from outside the car, though. Drivers and passengers of vehicles at any level of autonomy may learn to hack the car to tone down or shut off features. For example, in June 2018, the U.S. Department of Transportation’s National Highway Traffic Safety Administration issued a cease-and-desist letter to the third-party vendor selling the aftermarket Autopilot Buddy, a device that reduces the Tesla’s nagging alerts when the driver’s hands are removed from the steering wheel.

AAA’s Brannon is concerned that human drivers may put too much trust in the semi-autonomous systems in their cars. “There’s a tradeoff,” he said. “It is going back to the human behind the wheel, making sure that this human understands the benefits as well as the limitations of whatever technology the vehicle has that they are operating, whether that’s a 1990 Ford F-150 or the latest Tesla. Everyone should understand the systems and not the vehicles that they are driving.”

Whether or not it becomes illegal some day for humans to drive a car, as Elon Musk mused at a 2015 Nvidia conference, one thing is for certain: The self-driving car will profoundly change our car culture forever.

—Ed Sperling contributed to this report.

What’s clear is the self-driving car won’t be the ideal getaway vehicle anymore, particularly if there is no steering wheel or gas pedal. And while it’s not hard to imagine a world in which technology controls people, the alternative script involves self-driving cars that are pushed around by human drivers, pedestrians, scooters, or even bicyclists.

“You can bully self-driving cars,” said Peter Hancock, Provost’s Distinguished Research Professor and head of the MIT² Laboratory at the University of Central Florida (UCF), where he studies autonomous vehicles and psychology. “Once you know the way it is going to react, you can move it over in its lane or make it decelerate, because you can be the bigger bully and you know that it is going to avoid hitting you. If you start edging into the lane it is going to start edging away from you.”

Who should fear whom? For now, people seem to be afraid of the self-driving car, and the numbers don’t seem to be improving. AAA reported in May 2018 that Americans are more afraid to ride in a fully autonomous vehicle than they were the prior year. In fact, AAA said 73% of driving Americans are afraid to ride in a fully self-driving vehicle.

“There may be some people who never trust an automated system,” said Hancock, who studies dimensions of trust of systems and humans in his lab. “When Tesla and Waymo are marketing, they have to market to people who are going to trust those systems.”

But if anyone needs to be afraid, it might be weighted heavily against self-driving car OEMs and their programmers.

“This will be a big problem, and so far we have not seen plans for a solution,” said Ty Garibay, chief technology advisor at ArterisIP. “The big problem is robots or autonomous things interacting with people. And while this may not be a problem in a factory, because people are paid to work with robots, it’s definitely an issue with autonomous vehicles in cities. I don’t see how autonomous vehicles will work in an urban area. You see the same thing happening in the home market with robots if a child knows it won’t do anything.”

The reason is simple—human beings are unpredictable, aggressive, and sometimes malicious creatures.

Mixed driving styles

This presents a problem because self-driving cars and human drivers will share the road for many years. “It’s going to be very interesting as we look to the future with that mixed fleet because the autonomous cars are driven and behave differently than their human counterparts, and that’s often a good thing,” Greg Brannon, director of automotive engineering at the American Automobile Association, told Semiconductor Engineering. “Self-driving cars are more cautious. They are more likely to obey speed limits. They are less likely to be distracted. All those things are good. But they will stand out among human-driven counterparts for some time, until the programming develops in the sensor fusion and all of the artificial intelligence to the point that they mimic a safe human driver.”

Different driving styles add to the complexity self-driving car programmers have to contend with. Cities and states vary in what is expected and how drivers interact. Human drivers and pedestrians have to learn these styles when visiting from a different place. It’s the same for a self-driving car?

“I just returned from in Italy,” said Hancock. “I did bit of driving myself. How does a self-driving car ever work in Italy, because you are constantly being cut off by Vespas?” He concluded it’s faster to walk.

There’s an unwritten code between humans that use the transportation system, he said, using Italy as an example. At first, such local systems don’t make sense to the stranger. But then the driver learns local ways drivers communicate with each other. Drivers in Italy don’t allow slow drivers to sit in the fast lane. A human gradually learns to appreciate why the local customs exist, but the self-driving car needs that to be programmed into its logic.

“If you traveled overseas or traveled enough around the U.S., you can begin to realize that different geographies have different driving styles and likely for the automation to function well, the automation might have to be geographically oriented,” Brannon agreed. ”In fact It’s a very complex task but as we look towards the future, that’s something to be considered.”

“Imagine a car—an autonomous vehicle—that waits for the proper break in traffic before it enters the Holland Tunnel. That autonomous car likely will be there for the next decade before it gets into the tunnel.” laughed Brannon. “There’s all those things that will have to be worked out. So it will be a mix and there’s a lot of very, very smart people that are working in this space but it is a big challenges.”

Cars will have to adapt, or they will have to be regulated.

“There are two scenarios unfolding here,” said Burkhard Huhnke, vice president of automotive strategy at Synopsys. “One is happening in China, where you can have a fully autonomous city and avoid hybrid mixes of human drivers and autonomous vehicles. The other is when you have a hybrid world of human-driven and autonomous vehicles, which is where we are today. The average time a car stays in the market is eight years, and within the next eight years you’re going to see more and more autonomous technology coming into the market. The big question is how that will affect your daily commute.”

It’s not just people that will cause the problems, though. How autonomous vehicles interact with other autonomous vehicles has yet to be ironed out, too.

“If your maximum speed is 10% lower than you competitor’s car, that can cause a problem,” Huhnke said. “Even with acceleration, does it go full-throttle or smoothly? Programming everything to work together is quite a challenge. Humans see a speed limit sign and sometimes we think we can go faster. So it’s a question of interpretation of rules. With self-driving cars, you have to build in flexibility to be able to deal with that.”

Bullying the robocar

Autonomous vehicles are expected to be predictable, polite and patient drivers. And humans will quickly learn how to push these types of suckers around. Will pedestrians start jaywalking more and jumping in front of self-driving cars that stop no matter what?

“Gosh, I sure hope not,” said AAA’s Brannon. “There’s something called physics that is involved, as well. Regardless of the technology, that’s very difficult to overcome.”

That may boil down to how human-like the technology can become. “The key right now is that people can tell it’s an autonomous car,” said Jack Weast, chief systems architect of autonomous driving solutions at Intel. “They drive conservatively, which makes it a target. There’s a technical reason for that, which is decision-making algorithms are implemented using reinforcement learning. It’s basically reward behavior, which could be time to destination or fuel economy. The problem with AI systems is that they’re probabilistic, so you’re dealing with a best guess. There are multiple sensors to recognize cars and pedestrians, so if one sensor misses another picks it up. But there is no redundancy in the decision-making. And because a wrong decision may cause an accident, the weight for safety has a higher reward. That’s why autonomous vehicles are overly conservative. You see them jerking back into their lane. You also see human drivers getting annoyed by them.”

Intel’s approach to solving this problem is to create an AI stack, rather than an end-to-end system, establishing a hierarchy of AI functions. At the top is what it calls Responsibility Sensitive Safety, which the company has published as open source.

Fig. 1: Dealing with unpredictability using RSS. Source: Intel/Mobileye

“This allows a car to be more assertive and human-like,” said Weast. “If I’m sitting in the back seat of an autonomous car I can’t tell if it’s being driven by a person or whether it’s autonomous. This is where we’re heading. It’s a mixed environment future. Even in New York City, a human driver will not hit the brake pedal until the last possible second. Autonomous vehicles can do this, too. They can wedge into traffic to create space, look for a vehicle to give way, and back up into their lane if they don’t. The origins of this approach go back to safety, and the safety value of RSF is that it can be formally verified. This is a lot different than the current industry approach of, ‘Here’s a black box, trust me.’ It doesn’t matter if you’ve driven 1 million miles. End-to-end AI approaches are dangerous. You need layering or stacking or stages, so if one algorithm changes the independent safety seal remains static.”

Another issue is the safest traveling distance, said UCF’s Hancock. A safe traveling distance can be really far behind the car it is following—or just one foot away. “The reason one foot proves to be safe is because the car in front can only change velocity a very little amount, so there is not enough space to cause a collision.” If a one-foot driving distance is also one of the optimal following distances that the automated vehicle can choose, it can cause panic unless the human driver knows this is a safe self-driving car behind him.

Siemens has developed a verification and simulator system that helps discover edge cases with virtualization engine and simulator. These edge cases can be used for training self-driving cars. When Google drives millions of road tests, “what they’re looking for is the edge cases where some complex traffic scenario appears pedestrians and whatever else,” said Andrew Macleod, director of automotive marketing at Mentor, a Siemens Business. “That can all be done in the virtual world, so Siemens has got a virtualization capability where we can create vehicle scenarios against pedestrians coming in front of a vehicle at a specific speed, and so on. We can simulate all of the different data from sensors, whether it’s a camera, radar, LiDAR. And then we can add weather, for example. How does the camera sensor respond if it is snowing? We model all of that and create these edge cases, and then feed that data into a vehicle simulator to see how the vehicle responds. For example, you might have some scenario where the vehicle has to swerve to avoid the obstacle, pedestrian or whatever else. We can actually model how the vehicle behaves in an extreme example.”

What isn’t obvious is how people will react. “Automotive OEMs are dealing with this today from the driver and pedestrian side,” said Tim Lau, senior director of automotive product marketing at Marvell. Current collision avoidance systems may stop the human driver from trying the bullying. “They really want to have systems that predict what the driver intends to do. To be able to predict that, there’s a lot of information that the car needs to understand, including the driver’s state. There are so many technologies out that, like ADAS for collision avoidance.”

But the pedestrian who bullies? “Once I asked the car OEMs how they protect for that,” said Lau. “What is the plan? The systems are designed to protect and gather data and make best decisions, but if someone wants to be malicious and step in front of the car, I don’t think there is a system in place today that can deal with that.”

Creating systems that communicate with pedestrians can help. These so-called V2x systems have many uses, and there are a variety of technologies under development around the world. “This will be different technologies, like the smart garage has sensors that tell you what spot is taken or not,” said Lau. “Having that ability to have car-to-car, car-to-person, car-to-infrastructure communication is vital. It can tie into your cell phone or watch. Even the visibility around a corner will be possible.”

The real turning point, though, will be the smart city. “What the smart city offers you that sensors like LiDAR and camera and radar don’t is vehicle-to-vehicle communication and vehicle-to-infrastructure,” said Mentor’s Macleod. “The question of autonomous vehicles bullying each other and nudging each other on the road wouldn’t happen once we can communicate with each other because the most efficient path to get from A to B will be defined, with a driving scenario mapped out in terms of when to accelerate and when to brake. Eventually, traffic lanes probably wouldn’t be needed. The cars will just communicate with each other.”

Reaching for Level 5

Despite Volvo’s recently announced concept for autonomous commuter pods, where passengers can sit at a table or sleep in a bed as a vehicle traverses hundreds of miles of roadway, the short-term future appears to be much more modest. Most likely the first self-driving cars will be used for ride sharing in limited areas of a city. Along with that, fleets of trucks that cruise the freeways at night are likely to deliver goods to a simple end point.

Fig. 2: Falling asleep at the wheel has new meaning in Volvo’s concept robocar 360c. Source: Volvo

“We don’t think it’s going to be anything like there’ll be more self-driving cars and all of a sudden the streets will be flooded with them everywhere,” said Macleod. “But you can put a bunch of self-driving trucks on the road at night going from point A to point B, very predictably. After that, campuses and maybe airports, followed by something like a downtown area maybe three to five square miles, which would be fully autonomous with autonomous robotaxis.”

This matches Ford’s current prediction, which names urban mobility and delivery services as possible first uses for the automated system. The company is working on this plan with startup Argo AI. Ford has set a goal of having self-driving cars on the road by 2021, but Bryan Salesky, Argo’s CEO, said the technology won’t be released until it is ready.

“Every day that we test, we learn of and uncover new scenarios that we want to make sure the system can handle,” said Salesky. Ford is testing the cars in Miami-Dade County in Florida. Even after launch, the cars will operate only in closed, geo-fenced areas of certain cities and will function as a ride-sharing service. The other option is automated trucking at night.

Ford places two people in each test car to monitor the road tests and take over driving if needed. They report back to Argo when unusual interactions with pedestrians or other vehicles occur around the car. “In the cities we operate in, we haven’t seen any reactions that are different than what you would normally see,” said Salesky. “They very much treat us like any other vehicle. We have actually gotten a positive response from the community in that our vehicles are cautious. They don’t get distracted. They are able to stop with plenty of margin before a crosswalk to let people use the crosswalk. Our car won’t pull into a crosswalk and block it. Our vehicle will nudge over and give pedestrians who are jogging along the side of the road a little more room.”

Ford is also experimenting with using lights to communicate with pedestrians, though this work still has a long way to go.

Testing sits at the center of Argo’s development process. “What we do over time is build up this database of regression tests that need to pass before a new line of code can be added to the system. That scenario set is a really critical piece of our development process,” said Salesky, who got his start working on DARPA vehicles at Carnegie Mellon University’s National Robotics Engineering Center and later worked on Google’s self-driving car.

Argo and Ford don’t push code to the cars until it has been thoroughly tested and shown to be error-free, even when the change is a simple fix for a single problem. Argo owns its own AI database and code.

Will self-driving cars save lives?

The semiconductor and automotive industries contend that self-driving vehicles will save lives. Some academics agree that the conservative, law-abiding self-driving car will do just that. But there’s a catch.

There are about 35,000 to 40,000 vehicle-related fatalities a year in the United States, depending on who’s counting. The National Safety Council (NSC) is spearheading an effort to get that number down to zero by 2050 through its Road to Zero coalition. Drill down into those numbers, though, and the picture gets more complicated. According to the NSC, motorcycles were involved in 14% of the deaths in 2014. Moreover, almost half of roadway deaths occur on rural roads, which are the most dangerous roads to drive on.

So will self-driving cars make a dent in those numbers any time soon? Where speed and impairment (drunk, drugged, drowsy, or texting) are factors in the deaths, the self-driving car may have a big role to play. But while the coalition concluded that autonomous driving assistance systems will help, it noted they are only part of the solution. The coalition also wants to engender a culture of zero tolerance for unsafe behavior, along with better roadways and infrastructure.

“We continue to see deaths on the American roadway, some 35,000 annually, and somewhere between 80% and 90% of those deaths are the result of human error,” said AAA’s Brannon. “The idea is that if you can remove that human error, the number will decrease. That’s not to say that the autonomous vehicles won’t make mistakes along the way.”

But saving lives as a selling point doesn’t necessarily add up yet, according to Professor Hancock. “The problem is that these systems cannot be free of flaw. It is just not physically possible. The flaw might be in the design, it might be in the software, it might be in the software integration. This is not going to be a collision-free system for many decades to come. In fact, autonomous systems will create their own new sorts of accidents. We are going to see these types of collisions coming up not despite automation, but because of some of the assumptions that they make.”

The hack is coming from inside the car

Not all the troublesome human behavior comes from outside the car, though. Drivers and passengers of vehicles at any autonomy level may learn to hack the car to tone down or shut off features. For example, in June 2018 the U.S. Department of Transportation’s National Highway Traffic Safety Administration issued a cease-and-desist letter to the third-party vendor selling the aftermarket Autopilot Buddy, a device that reduces Tesla Autopilot’s nagging when the driver’s hands are removed from the steering wheel.

AAA’s Brannon is concerned that human drivers may put too much trust in the semi-autonomous systems in their cars. “There’s a tradeoff,” he said. “It is going back to the human behind the wheel, making sure that this human understands the benefits as well as the limitations of whatever technology the vehicle has that they are operating, whether that’s a 1990 Ford F150 or the latest Tesla. Everyone should understand the systems in the vehicles that they are driving.”

Whether or not it becomes illegal some day for humans to drive a car, as Elon Musk mused at a 2015 Nvidia conference, one thing is for certain: The self-driving car will profoundly change our car culture forever.

—Ed Sperling contributed to this report.