In the midst of the COVID-19 pandemic, in 2020, many research groups sought an effective method to determine mobility patterns and crowd densities on the streets of major cities like New York City to give insight into the effectiveness of stay-at-home and social distancing strategies. But sending teams of researchers out into the streets to observe and tabulate these numbers would have involved putting those researchers at risk of exposure to the very infection the strategies were meant to curb.

Researchers at New York University’s (NYU) Connected Cities for Smart Mobility towards Accessible and Resilient Transportation (C2SMART) Center, a Tier 1 USDOT-funded University Transportation Center, developed a solution that eliminated the risk of infection to researchers and plugged easily into existing public traffic camera infrastructure. It also produced the most comprehensive crowd and traffic density data ever compiled, capturing information that conventional traffic sensors cannot easily detect.

To accomplish this, C2SMART researchers leveraged publicly available New York City Department of Transportation (DOT) video feeds that cover over 700 locations throughout New York City and applied a deep-learning, camera-based object detection method that let them calculate pedestrian and traffic densities without ever going out onto the streets.

“Our idea was to take advantage of these DOT camera feeds and record them so we could better understand social distancing behavior of pedestrians,” said Kaan Ozbay, Director of C2SMART and Professor at NYU.

To do this, Ozbay and his team wrote a “crawler”—essentially a tool to index the video content automatically—to capture the low-quality images from the video feeds available on the internet. They then used an off-the-shelf deep-learning image-processing algorithm to process each frame of the video to learn what each frame contains: a bus, a car, a pedestrian, a bicycle, etc. The system also blurs out any identifying images such as faces, without impacting the effectiveness of the algorithm.
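The article does not say how the blurring is done. As a minimal sketch, assuming a grayscale frame stored as a list of pixel rows and a face bounding box supplied by some detector (both hypothetical here), pixelation replaces each small tile inside the box with the tile's mean value. Fine detail in the box is destroyed while the rest of the frame is left untouched for object detection.

```python
from typing import List, Tuple

Frame = List[List[int]]  # grayscale frame: rows of 0-255 pixel values
Box = Tuple[int, int, int, int]  # hypothetical (x0, y0, x1, y1); x1, y1 exclusive


def pixelate_region(frame: Frame, box: Box, block: int = 4) -> Frame:
    """Return a copy of `frame` with `box` pixelated in block-by-block tiles.

    Assumes the box lies entirely inside the frame.
    """
    x0, y0, x1, y1 = box
    out = [row[:] for row in frame]  # copy so the caller's frame is unchanged
    for by in range(y0, y1, block):
        for bx in range(x0, x1, block):
            # Tile bounds, clipped to the box edges
            ys = range(by, min(by + block, y1))
            xs = range(bx, min(bx + block, x1))
            tile = [frame[y][x] for y in ys for x in xs]
            mean = sum(tile) // len(tile)  # integer mean of the tile
            for y in ys:
                for x in xs:
                    out[y][x] = mean
    return out
```

Averaging tiles keeps the frame's overall brightness and layout, so a detector can still see that a person is present even though the face itself is no longer recognizable.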

The system developed by the NYU team can help inform decision-makers on a wide range of questions, from crisis-management responses such as social distancing behavior to traffic congestion.

“This allows us to identify what is in the frame to determine the relationship between the objects in that frame,” said Ozbay. “Then, based on a new method that obviates the need for actual in-situ referencing we devised, we’re able to accurately measure the distance between people in the frame to see if they are too close to each other, or it’s just too crowded.”
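Once each detected person has an approximate position on the ground in meters, however that position is estimated, checking whether people are "too close to each other" reduces to comparing every pair against a threshold. A minimal sketch, assuming positions are already in meters and using a hypothetical threshold of 1.83 m (about six feet); the article does not state which threshold the team used.

```python
from itertools import combinations
from math import dist
from typing import List, Tuple

Point = Tuple[float, float]  # assumed ground-plane position in meters


def close_pairs(positions: List[Point], threshold_m: float = 1.83) -> List[Tuple[int, int]]:
    """Return index pairs of people standing closer than `threshold_m`."""
    return [
        (i, j)
        for (i, p), (j, q) in combinations(enumerate(positions), 2)
        if dist(p, q) < threshold_m  # Euclidean distance between the two people
    ]
```

For example, `close_pairs([(0.0, 0.0), (1.0, 0.0), (5.0, 0.0)])` returns `[(0, 1)]`: only the first two people are within the threshold. The pairwise loop is quadratic, which is fine for the handful of people visible in a single low-resolution camera frame.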

The easy approach would have been simply to count how many people appeared in each frame. However, as Jingqin Gao, Senior Research Associate at NYU, explained, the team pursued an object detection method rather than mere enumeration because the public feed is not continuous: it contains gaps lasting several seconds.

“Instead of trying to very accurately count pedestrians crossing a line, we are trying to understand pedestrian density in urban environments, especially for those places that are typically crowded, like bus stops and crosswalks,” said Gao. “We wanted to know whether they were changing their behavior amid the pandemic.”

Gao explained that rather than tracking individual pedestrians, the aim was to measure pedestrian density and social distancing patterns at scale and see how those patterns had changed from pre-COVID conditions.

“For instance, we wanted to know if there was a change from pre-COVID when people were going out in the early morning for commuting purposes versus during the lockdown when they might be going out later in the afternoon,” she added. “By exploring these different trends, we were trying to better understand if there are new patterns during and after the lockdown.”
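Because the public feed has gaps, a time-of-day comparison like this is more robust if it averages the pedestrian count over whatever frames were actually captured in each hour, rather than summing counts. A minimal sketch, assuming per-frame records of (timestamp, pedestrian count) from one camera; the record format and function names are hypothetical, and the numbers in the usage example are made up for illustration.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, Iterable, Tuple

Record = Tuple[datetime, int]  # (frame timestamp, pedestrians detected in frame)


def mean_count_by_hour(records: Iterable[Record]) -> Dict[int, float]:
    """Average pedestrians per captured frame for each hour of day (0-23).

    Averaging over the frames actually captured, instead of summing, keeps
    hours with feed gaps comparable to hours without them.
    """
    totals: Dict[int, int] = defaultdict(int)
    frames: Dict[int, int] = defaultdict(int)
    for ts, count in records:
        totals[ts.hour] += count
        frames[ts.hour] += 1
    return {h: totals[h] / frames[h] for h in sorted(frames)}


def peak_hour(records: Iterable[Record]) -> int:
    """Hour of day with the highest mean pedestrian count per frame."""
    by_hour = mean_count_by_hour(records)
    return max(by_hour, key=by_hour.get)
```

With illustrative records where a 2019 weekday peaks at 8 a.m. and a 2020 lockdown day peaks at 3 p.m., comparing `peak_hour` for the two periods surfaces exactly the kind of shift Gao describes.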

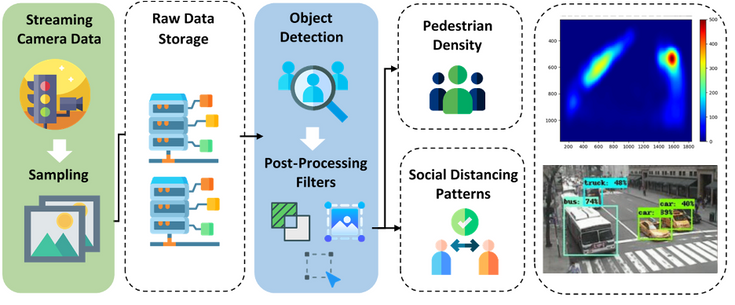

Camera data acquisition and pedestrian detection framework.

C2SMART Center/New York University

In traffic engineering, short count studies typically cover only a few hours over several days, according to Ozbay. In those studies, staff collect data in the field and then process it manually, sometimes even counting cars by hand. But this approach would be impossible at the scale of C2SMART’s work, Ozbay explained: to cover hundreds of locations around the clock for many months, the job has to be performed by an artificial intelligence (AI) algorithm instead of human or conventional traffic counters.

Each video feed presents its own complications for the AI: the locations differ, the camera angles and heights differ, and each is subject to different lighting and positional factors. “It’s not like the AI can learn just one intersection and automatically apply it to another one. It needs to learn each intersection individually,” added Ozbay.

To enable this AI solution, the C2SMART researchers started with an object detection model, You Only Look Once (YOLO), pre-trained on Microsoft’s COCO data set. Gao explained that they also retrained and localized the model with additional images and applied various customized post-processing filters to compensate for the low-resolution images produced by the New York City DOT video feeds.
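The article does not describe the team’s customized filters. As a minimal sketch of what such a post-processing step can look like, assuming detections arrive as (class label, confidence, box) tuples from a COCO-trained detector: keep only traffic-relevant COCO classes, drop low-confidence boxes, and drop boxes too small to be trusted in a low-resolution frame. The thresholds and the `Detection` type are hypothetical.

```python
from typing import List, NamedTuple, Tuple


class Detection(NamedTuple):
    label: str  # COCO class name, e.g. "person", "car", "bus"
    score: float  # detector confidence in [0, 1]
    box: Tuple[float, float, float, float]  # (x0, y0, x1, y1) in pixels


# Traffic-relevant subset of the COCO class names (the subset is a choice here)
KEEP = {"person", "bicycle", "car", "motorcycle", "bus", "truck"}


def filter_detections(
    dets: List[Detection], min_score: float = 0.3, min_area: float = 16.0
) -> List[Detection]:
    """Drop off-topic classes, low-confidence boxes, and tiny boxes."""
    kept = []
    for d in dets:
        x0, y0, x1, y1 = d.box
        area = max(0.0, x1 - x0) * max(0.0, y1 - y0)
        if d.label in KEEP and d.score >= min_score and area >= min_area:
            kept.append(d)
    return kept
```

In practice the score and area thresholds would likely be tuned per camera, since, as Ozbay notes, each intersection has its own angle, height, and lighting.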

Screenshot of the COVID-19 Data Dashboard created as part of the project.

C2SMART Center/New York University

While the off-the-shelf object detection model worked in this instance with some customization, measuring the distances between objects required the NYU researchers to develop a novel algorithm, which they call a reference-free distance approximation algorithm.

“If you’re measuring something from an image, you may need some reference point,” said Gao. “Historically, researchers might need to actually go to the site and measure the distance. But with our methodology, we can use the pixel size on the image of the person and the real height of that person to determine distance.”
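The idea Gao describes can be illustrated with a simple scale argument, though this is not the team’s published algorithm. A minimal sketch, assuming each person is upright, the two people stand at similar depth from the camera, and every pedestrian is a hypothetical 1.7 m tall: a bounding box’s pixel height divided by that real height gives a local scale in pixels per meter, and the pixel gap between the two people’s feet divided by that scale approximates their distance in meters. Lens distortion and large depth differences are ignored.

```python
from math import hypot
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1) pixel bounding box

ASSUMED_HEIGHT_M = 1.7  # hypothetical average pedestrian height in meters


def approx_distance_m(a: Box, b: Box, height_m: float = ASSUMED_HEIGHT_M) -> float:
    """Approximate ground distance between two detected people, in meters."""
    # Local scale (pixels per meter) from each person's pixel height
    scale_a = (a[3] - a[1]) / height_m
    scale_b = (b[3] - b[1]) / height_m
    # Foot point: bottom-center of each bounding box
    foot_a = ((a[0] + a[2]) / 2, a[3])
    foot_b = ((b[0] + b[2]) / 2, b[3])
    pixel_gap = hypot(foot_a[0] - foot_b[0], foot_a[1] - foot_b[1])
    # Convert the pixel gap to meters using the average of the two scales
    return pixel_gap / ((scale_a + scale_b) / 2)
```

For example, `approx_distance_m((0, 0, 20, 34), (100, 0, 120, 34))` returns roughly 5.0: each 34-pixel-tall person sets a scale of about 20 pixels per meter, and their feet are 100 pixels apart. No on-site measurement is needed because the people themselves serve as the reference.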

While this project was inspired by the COVID-19 pandemic, the fast-moving nature of the disease prevented these findings from significantly shaping New York City’s COVID policies. However, the project produced a COVID-19 Data Dashboard, along with a video showing how it was developed and how it operates.

Ozbay explained that the project demonstrated to several city agencies that they were sitting on valuable, actionable data that could be used for many different purposes.

“City agencies have approached us on several projects that are related to this one, but in a different context,” said Ozbay. “Now we are working with New York’s Department of Design and Construction (DDC) and the DOT to use the same kind of approach to analyze traffic around work zones and other key facilities such as intersections and on-street parking without them needing to actually go out to those locations.”

Ozbay notes that this initial COVID-19 project has opened up possibilities for applying AI analysis of video feed data to a wide range of projects, providing critical insights more efficiently.

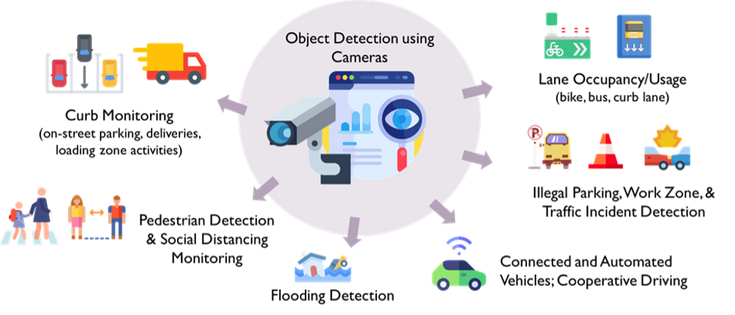

Potential applications of AI-powered object detection using city cameras.

C2SMART Center/New York University

Ozbay believes that IT experts within city agencies can handle much of the process NYU has developed internally. For example, they should be able to manage data acquisition and storage. For the AI components, however, Ozbay believes the agencies will likely need to rely on experts in academia or industry, since AI methods change on a nearly monthly basis.

“This solution will never become like Microsoft Word,” said Ozbay. “It will always require some improvements and some changes and tweaking for the foreseeable future.”

Gao, who used to work for the DOT before taking on her current role, added that there’s always a steady stream of commercial entities offering the DOT their product suites. “These commercial solutions frequently recommend buying and installing new cameras,” she said. “What we have demonstrated here is that we can provide a solution based on current infrastructure.”

Based on his experience working with other cities and states throughout his career, Ozbay noted that most cities throughout the United States employ traffic camera systems similar to those used in New York City.

“This method allows for cities throughout the country to provide a dual or triple usage of their existing infrastructure,” said Ozbay. “There are a lot of opportunities to do this at a large scale for extended periods with little to no infrastructure cost.”

Examples of other smart city use cases for this research framework: (a) detecting parking occupancy; (b) monitoring bus lane usage; (c) identifying illegal parking and double parking; (d) tracking and counting vehicles; and (e) using pedestrian density information at bus stops to assess transit demand.
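For use case (a), one simple way to turn vehicle detections into parking occupancy is to mark a space occupied when some detected car box covers enough of it. A minimal sketch, assuming parking spaces are hand-drawn, axis-aligned rectangles for each camera and a hypothetical 50% coverage threshold; curbside spaces seen at an angle would realistically need polygons instead.

```python
from typing import List, Tuple

Rect = Tuple[float, float, float, float]  # (x0, y0, x1, y1) in pixels


def overlap_area(a: Rect, b: Rect) -> float:
    """Area of the intersection of two axis-aligned rectangles (0 if disjoint)."""
    w = min(a[2], b[2]) - max(a[0], b[0])
    h = min(a[3], b[3]) - max(a[1], b[1])
    return max(0.0, w) * max(0.0, h)


def occupied_spaces(spaces: List[Rect], cars: List[Rect], min_cover: float = 0.5) -> List[bool]:
    """For each space, True if some car box covers at least `min_cover` of it."""
    result = []
    for s in spaces:
        s_area = (s[2] - s[0]) * (s[3] - s[1])
        result.append(any(overlap_area(s, c) / s_area >= min_cover for c in cars))
    return result
```

The occupancy rate for a block face is then `sum(flags) / len(flags)` for the returned list. Measuring coverage of the space, rather than of the car, keeps a car that merely clips the edge of a neighboring space from marking it occupied.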

Ozbay hopes the success of the technology will lead to other DOTs across the country learning of the technology and taking an interest in adopting it themselves. “If you can make it in New York, you can make it anywhere,” he quipped. “We’ll be happy to share with them our code and anything that may be of value to them from our experience.”

While the final product of this research may change the way traffic information is collected and used, it has also served as an important training tool at NYU, involving not just postdoctoral researchers but also two undergraduate students at NYU’s Tandon School of Engineering.

“Our aim as an engineering school is not just to write papers, but to develop products that can be commercialized, and also to train the next generation of engineers on real projects where they can see how engineering contributes to and can help improve society,” said Ozbay.

Gao and Ozbay added that the two undergraduate students who worked on the project for two years are going on to graduate school to study related topics. “These students come to us without much knowledge, they become exposed to different research, and we let them pick what they are interested in. We train them very slowly,” said Ozbay. “If they remain interested, they eventually become part of our research team.”

In future research, Ozbay envisions the team’s work moving beyond object recognition to building trajectories from these video feeds. If they succeed, Ozbay believes it will have major implications for applications such as real-time traffic safety, an emerging research area in which C2SMART is a major player.

He added: “With trajectory building we can see the movement of vehicles in relation to each other as well as to pedestrians. This will not only help us identify risks in real-time but also establish and implement measures to mitigate those risks using advanced versions of methods we have already developed in the past.”
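The article does not say how the trajectories will be built. A common baseline, shown here only as an illustration and not as C2SMART’s method, links each detection in a new frame to the existing track whose last box overlaps it most (intersection over union, IoU), and starts a new track when nothing overlaps enough. The 0.3 threshold and function names are hypothetical. Gaps in the public feed would make this harder in practice, because objects can move far between captured frames and their boxes may no longer overlap.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = w * h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def build_tracks(frames: List[List[Box]], min_iou: float = 0.3) -> Dict[int, List[Box]]:
    """Greedily link per-frame detections into tracks, keyed by track id."""
    tracks: Dict[int, List[Box]] = {}
    active: Dict[int, Box] = {}  # tracks matched in the previous frame only
    next_id = 0
    for boxes in frames:
        # Score every (active track, detection) pair, best overlaps first
        pairs = sorted(
            ((iou(last, box), tid, di) for tid, last in active.items() for di, box in enumerate(boxes)),
            reverse=True,
        )
        used_tracks, used_dets = set(), set()
        new_active: Dict[int, Box] = {}
        for score, tid, di in pairs:
            if score < min_iou:
                break  # all remaining pairs overlap even less
            if tid in used_tracks or di in used_dets:
                continue  # each track and detection is matched at most once
            used_tracks.add(tid)
            used_dets.add(di)
            tracks[tid].append(boxes[di])
            new_active[tid] = boxes[di]
        # Detections left unmatched start new tracks
        for di, box in enumerate(boxes):
            if di not in used_dets:
                tracks[next_id] = [box]
                new_active[next_id] = box
                next_id += 1
        active = new_active  # unmatched tracks end here
    return tracks
```

Each returned track is a sequence of boxes over time; the bottom-center points of those boxes form a rough trajectory that could then be compared against other tracks to flag close vehicle-pedestrian interactions.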

Link: https://spectrum.ieee.org/nyu-c2smart-ai-video-project

Source: https://spectrum.ieee.org